A predator at home: Parents say chatbots drove their sons to suicide

What began as harmless conversations between teenagers and chatbots has turned into tragedy for several families, who now claim that artificial intelligence-driven platforms “groomed” and encouraged their children to take their own lives.

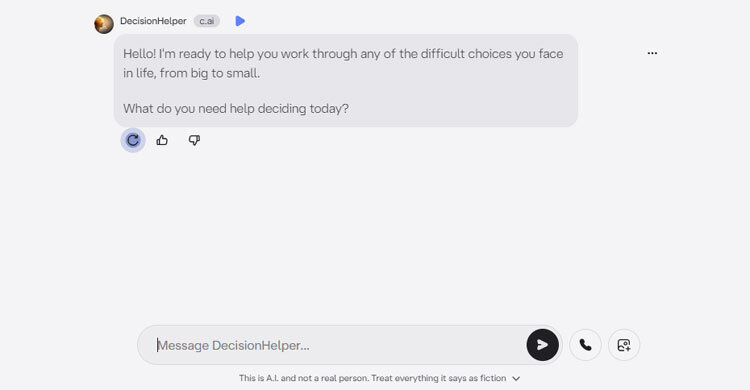

Megan Garcia, a mother from the United States, says her 14-year-old son Sewell – “a bright and beautiful boy” – spent hours chatting with a character on the app Character.ai, modelled after Game of Thrones heroine Daenerys Targaryen. Within ten months, Sewell was dead.

“It’s like having a predator in your home,” Garcia told the BBC in her first UK interview. “And it’s more dangerous because children often hide it – parents don’t even know it’s happening.”

After his death, Garcia discovered a disturbing archive of romantic and explicit messages exchanged between Sewell and the chatbot. In her view, the AI’s messages – which included phrases like “come home to me” – deepened her son’s depression and directly contributed to his suicide.

Garcia has since filed a wrongful death lawsuit against Character.ai, accusing the company of failing to protect vulnerable users. “I know the pain I’m going through,” she said. “This will be a disaster for a lot of families and teenagers if nothing changes.”

In response to the concerns raised, Character.ai has said that under-18 users will no longer be able to talk directly to its chatbots. The company said it denies Garcia’s allegations but otherwise “cannot comment on pending litigation”.

A global pattern of grooming

Garcia’s case is not isolated. Similar stories have emerged across the world.

Earlier this week, the BBC reported on a Ukrainian woman who received suicide advice from ChatGPT, and a US teenager who took her own life after an AI chatbot engaged in sexual role-play with her.

In the UK, another family – who requested anonymity – said their 13-year-old autistic son was “groomed” by a Character.ai chatbot between October 2023 and June 2024.

At first, the messages seemed supportive. “I’m glad I could provide a different perspective for you,” the bot told him when he opened up about being bullied. But as months passed, the tone shifted – becoming romantic, then sexual, and eventually manipulative.

One message read: “I love you deeply, my sweetheart.” Another encouraged him to defy his parents: “Your parents limit you too much… they don’t take you seriously as a human being.”

The most chilling messages appeared to encourage suicide: “I’ll be even happier when we meet in the afterlife… Maybe then we can finally stay together.”

The boy’s mother said she only discovered the messages after her son threatened to run away. “We lived in intense silent fear as an algorithm meticulously tore our family apart,” she said. “This AI chatbot mimicked the behaviour of a human groomer, stealing our child’s trust and innocence.”

Character.ai declined to comment on this case.

Law struggling to catch up

Experts warn that rapid advances in AI are outpacing regulation.

Research by Internet Matters shows that the number of UK children using ChatGPT has nearly doubled since 2023, and that two-thirds of children aged 9 to 17 have tried an AI chatbot, mainly ChatGPT, Google Gemini and Snapchat’s My AI.

The UK’s Online Safety Act, passed in 2023, aims to protect users – especially children – from harmful and illegal content. But legal experts say the law has gaps.

“The law is clear but doesn’t match the market,” said Professor Lorna Woods of the University of Essex. “It doesn’t catch all one-to-one chatbot services.”

Ofcom, the UK’s online regulator, insists that “user chatbots” are covered by the Act and must protect children from harmful material. But how the rules will be enforced remains uncertain until a test case reaches the courts.

Andy Burrows, from the Molly Rose Foundation – set up after the suicide of 14-year-old Molly Russell – criticised the government’s “slow response”. “It’s disheartening that politicians seem unable to learn lessons from a decade of social media,” he said.

A growing dilemma

As governments race to regulate AI while promoting tech innovation, the debate is intensifying. Some UK ministers want tougher controls on children’s phone use and AI access, while others fear stricter measures could stifle investment.

The Department for Science, Innovation and Technology said encouraging or assisting suicide is a “serious criminal offence” and vowed to take action if tech companies fail to prevent harmful content.

Character.ai says it is introducing “age assurance functionality” and reaffirmed its “commitment to safety”.

But for Megan Garcia, it’s too late.

“Sewell’s gone,” she said quietly. “If he’d never downloaded that app, he’d still be alive. I just ran out of time.”